The Double Helix of AI Collaboration: Why Enterprise Teams Need More Than Human-in-the-Loop

TL;DR

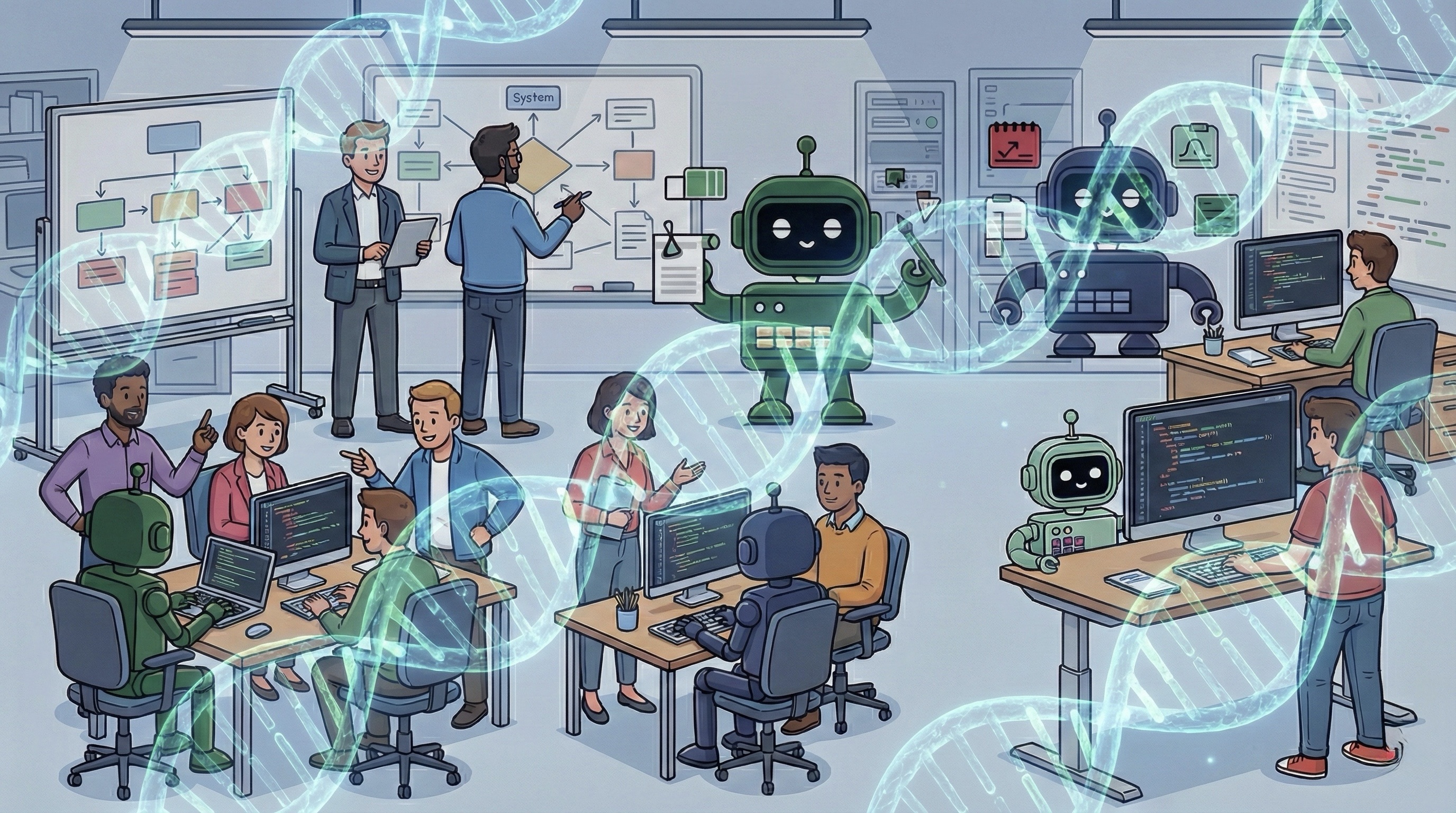

Enterprise AI collaboration isn't a single line between a human and an agent. In practice, three layers emerge: human-to-agent (the foundation), human-to-human (review, authority, organizational context), and agent-to-agent (specialized orchestration at scale). We call this the double helix: two collaboration strands running in parallel, connected by human-agent junction points. Most platforms optimize the junction points while ignoring the strands. That's where enterprise implementations quietly fail.

Why "human-in-the-loop" alone isn't enough

Everyone draws the same picture when they talk about AI collaboration. A human on one side, an AI agent on the other, a line connecting them. Human-in-the-loop. Simple, clean, intuitive.

And incomplete, at least for how enterprise software teams actually work.

After months of working with our first enterprise clients on the FairMind platform, we observed something that none of the existing frameworks quite capture:

- The human-agent relationship is real and foundational, but it's only the starting point

- When that relationship enters a real organization, it inevitably activates two additional layers of collaboration

- Most platforms ignore these layers entirely

We think of this as the double helix of AI collaboration. And if you're building or adopting AI platforms for enterprise teams, understanding it changes how you design both your product and your workflows.

The foundation: human-agent collaboration

Let's start with what the industry gets right. The direct collaboration between a human and an AI agent is the essential building block:

- A developer working with an AI agent to produce a solution design

- An architect using an agent to analyze infrastructure options

- A tech lead leveraging an agent to generate test strategies

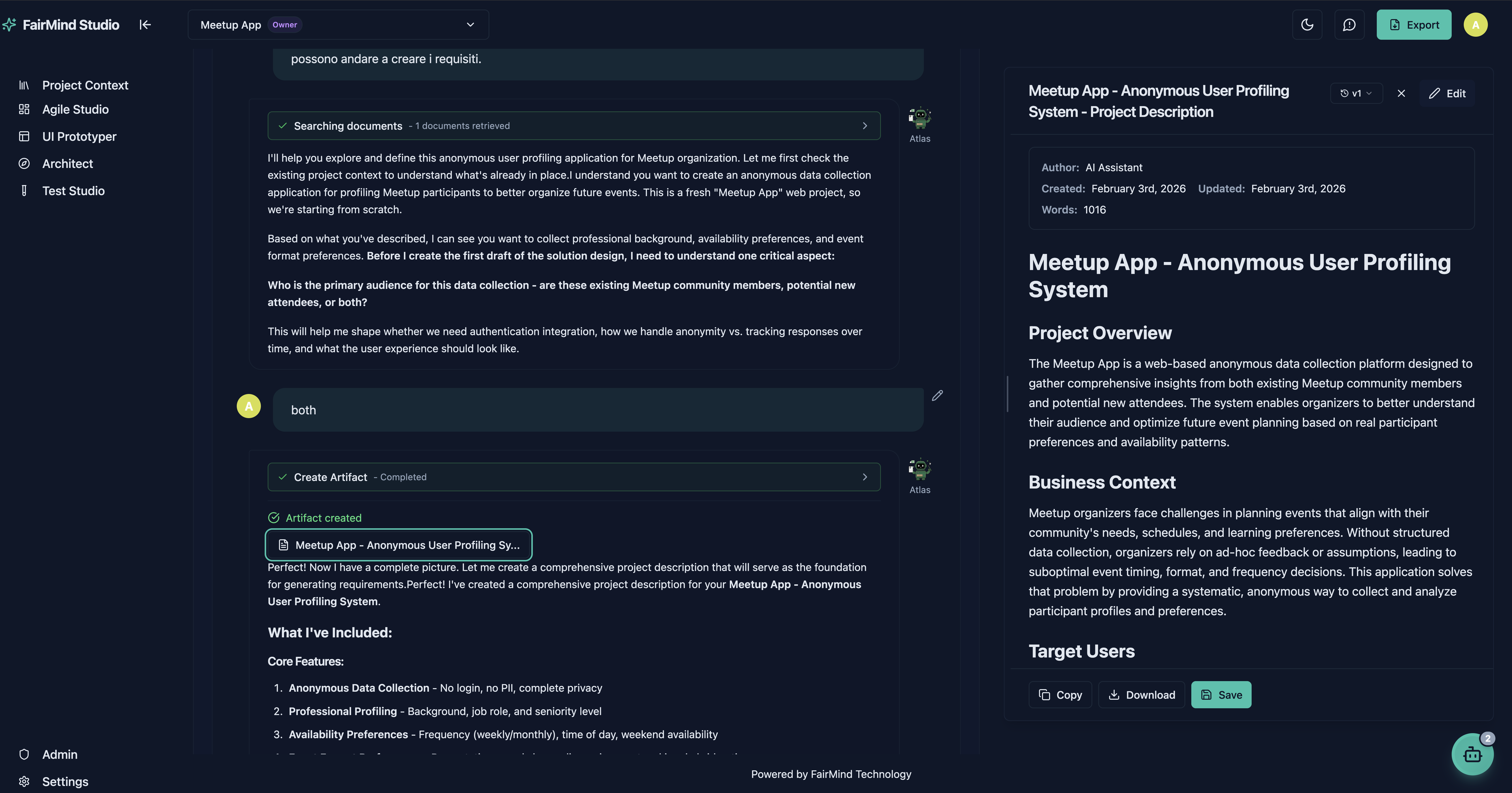

This layer is well understood, extensively studied, and the focus of virtually every AI platform on the market. At FairMind, it's where our specialized agents (NOVA for solution design, SAGE for code review, ATLAS for architecture analysis, and others) meet the humans who work with them daily.

But here's the thing: in enterprise software development, this foundation is necessary but not sufficient. Because the moment a human receives an agent's output, two things happen that the human-in-the-loop model doesn't account for.

What we observed in the field

Here's a pattern we've seen repeatedly with our clients:

- NOVA produces a detailed technical solution design for a developer on the team

- The developer reviews it, with the human-agent layer working exactly as designed

- But the document touches architectural decisions that require input from a senior colleague

So the developer shares the deliverable with that colleague. They discuss it. They challenge assumptions. They add organizational context that no AI agent could have known: political constraints, legacy system quirks, upcoming migrations, team capacity.

That's the first emergent layer: human-to-human collaboration, activated by the original human-agent interaction.

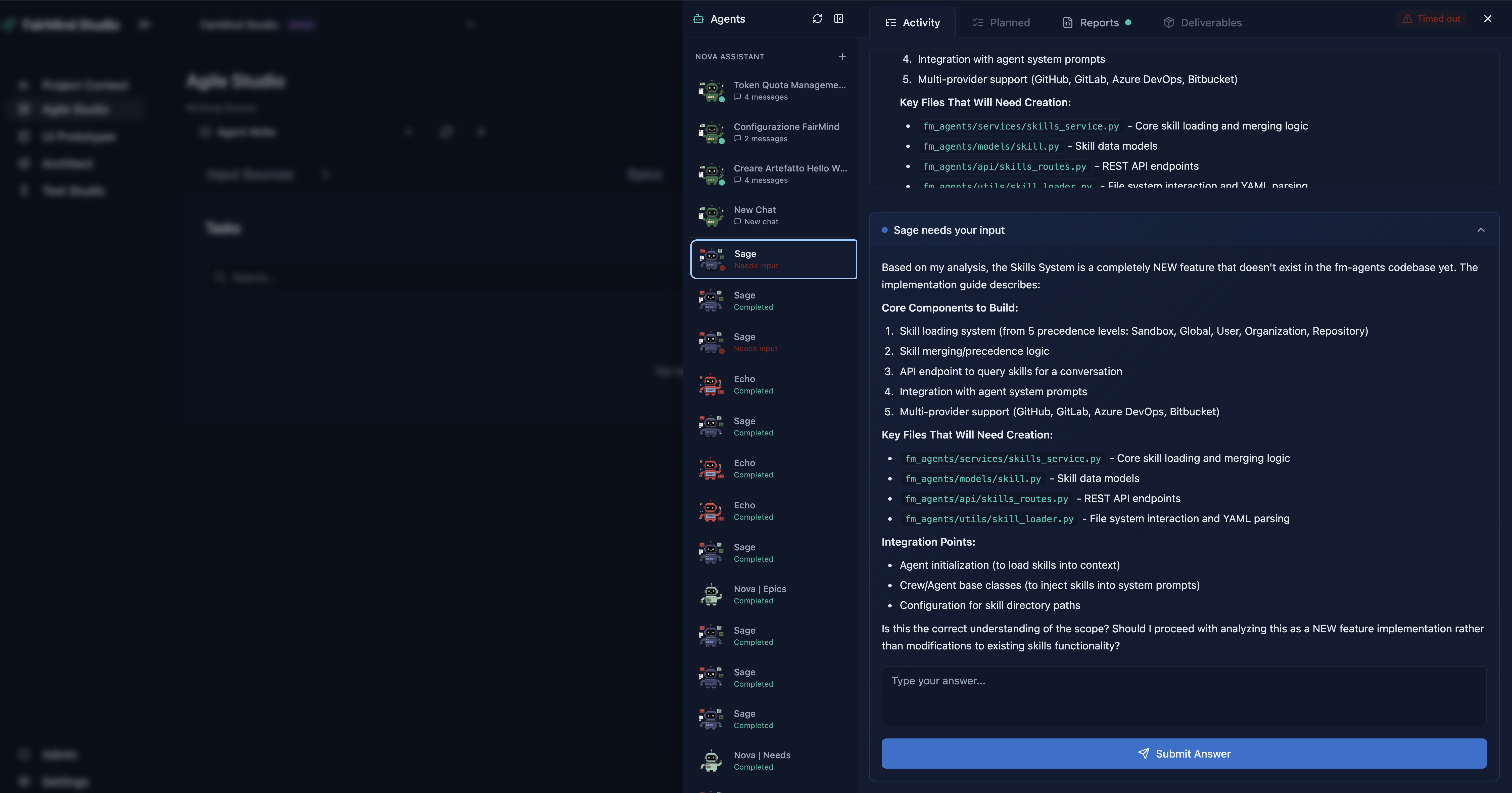

Then the feedback needs to flow back to the agent, not as a vague "try again," but as structured, contextualized input that allows the agent to produce a meaningfully better second iteration. And sometimes, the senior colleague invokes a different agent (perhaps one specialized in security review or infrastructure planning) to validate a specific aspect before the whole cycle continues.

That's the second emergent layer: agent-to-agent collaboration, triggered by the human decision to bring additional specialized capabilities into the workflow.

What started as a single line between a human and an agent has become a double helix.

Three layers, one structure

Let's be precise about what we're describing.

Layer 1: Human-to-Agent (the foundation)

The direct interaction where humans and AI agents work together on specific tasks. Every AI platform focuses here. It's necessary, well understood, and it works.

Layer 2: Human-to-Human (review & authority)

Where review, validation, and organizational decision-making happen. This is where authority lives, where institutional knowledge enters the process, where accountability is exercised. It follows the social and hierarchical dynamics of the organization and operates at a deliberate pace because it involves judgment, negotiation, and trust.

Layer 3: Agent-to-Agent (orchestration at scale)

Where specialized AI agents decompose problems, delegate subtasks, share context, and produce artifacts. It follows technical orchestration logic and operates fast, often asynchronously, and at a scale that would be impossible for humans alone.

The critical insight: the second and third layers are not optional extensions. They emerge inevitably the moment you deploy AI agents in a real organization with real teams. They have different speeds, different governance models, different failure modes, and different trust dynamics.

Yet almost every framework in the current landscape, from ChatCollab to MetaGPT to AutoGen, treats all participants, human or AI, as nodes in one flat collaboration structure. The gap between the academic literature on multi-agent systems and the literature on human-AI teaming is precisely where enterprise reality lives.

The double helix: a structural metaphor

In molecular biology, DNA consists of two strands running in parallel, connected at regular intervals by base pairs. Neither strand functions alone. The information that encodes life emerges from their complementary interaction.

In our model:

- The two strands = human-to-human collaboration layer + agent-to-agent collaboration layer

- The base pairs = human-to-agent interactions (the junction points where information crosses from one strand to the other)

- The human strand carries organizational knowledge, authority, judgment, and accountability

- The agent strand carries computational power, consistency, specialization, and speed

The industry has spent years optimizing the base pairs (making human-agent interaction smoother, more natural, more productive) while largely ignoring the strands they connect. But base pairs without strands are just isolated chemical bonds. It's the strands that give them structure, direction, and meaning.

The junction points: where value is created or destroyed

The most consequential moments happen where a deliverable crosses from one strand to the other:

Agent → Human strand

The output needs contextual translation. The humans reviewing it need to understand not just what the agent produced, but why it made certain choices, what alternatives it considered, what assumptions it relied on. Without this, the human review becomes either rubber-stamping (too much trust) or a frustrating black-box interrogation (too little trust).
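
To make the idea of contextual translation concrete, here is a minimal Python sketch of an envelope an agent could attach to a deliverable. The class `AgentHandoff`, its field names, and the sample values are illustrative assumptions, not FairMind's actual data model. It simply checks which pieces of context (rationale, alternatives, assumptions) are missing before the deliverable reaches a reviewer.

```python
from dataclasses import dataclass, field


@dataclass
class AgentHandoff:
    """Hypothetical envelope attached to a deliverable sent for human review."""
    agent: str
    deliverable: str
    rationale: str  # why the agent made its main choices
    alternatives: list[str] = field(default_factory=list)  # options considered and rejected
    assumptions: list[str] = field(default_factory=list)   # facts relied on without verification

    def review_gaps(self) -> list[str]:
        """Return the names of the context fields the agent left empty."""
        gaps = []
        if not self.rationale.strip():
            gaps.append("rationale")
        if not self.alternatives:
            gaps.append("alternatives")
        if not self.assumptions:
            gaps.append("assumptions")
        return gaps


# Illustrative values only.
handoff = AgentHandoff(
    agent="NOVA",
    deliverable="solution-design-v1",
    rationale="Chose an event queue to decouple billing from order intake.",
    assumptions=["The legacy billing system accepts asynchronous writes."],
)
print(handoff.review_gaps())  # ['alternatives']
```

A reviewer who sees `alternatives` flagged knows to ask what else was considered before trusting the design, which is one way to avoid both rubber-stamping and black-box interrogation.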

Human → Agent strand

The feedback needs structure. A conversation between two engineers might produce a rich, nuanced set of insights, but an AI agent can't act on "we talked about it and think the approach should be different." The feedback needs to be decomposed into specific, actionable parameters.
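
As a rough illustration of what "specific, actionable parameters" could look like, the Python sketch below breaks a review conversation into discrete feedback items and renders them as numbered instructions. `FeedbackItem`, `Action`, and `to_agent_prompt` are hypothetical names chosen for this example, and the sample feedback is invented.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    CHANGE = "change"        # replace a decision in the deliverable
    CONSTRAIN = "constrain"  # add a constraint the agent did not know about
    KEEP = "keep"            # explicitly confirm a decision


@dataclass(frozen=True)
class FeedbackItem:
    target: str       # section or decision the item refers to
    action: Action
    instruction: str  # what the agent should do
    reason: str       # organizational context behind the instruction
    author: str       # who made the call


def to_agent_prompt(items: list[FeedbackItem]) -> str:
    """Render feedback items as numbered instructions for the next iteration."""
    lines = []
    for n, item in enumerate(items, start=1):
        lines.append(
            f"{n}. [{item.action.value}] {item.target}: {item.instruction} "
            f"(reason: {item.reason}; from: {item.author})"
        )
    return "\n".join(lines)


# Illustrative values only.
items = [
    FeedbackItem("data store", Action.CONSTRAIN,
                 "Target the new cluster, not the current one.",
                 "Migration planned for next quarter", "senior architect"),
    FeedbackItem("API layer", Action.KEEP,
                 "Keep the proposed REST design.",
                 "Matches existing team conventions", "senior architect"),
]
prompt = to_agent_prompt(items)
print(prompt)
```

Keeping the reason and author on each item means the organizational context travels with the instruction instead of staying in the conversation where it was first raised.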

These junction points are where most enterprise AI implementations quietly fail. Not because the agents are bad, and not because the humans are resistant, but because nobody designed the handoff.

What this means for platform design

Once you see the full structure, several design implications become clear.

1. Collaboration features for humans, not just human-agent interfaces

Most AI platforms treat the human as an isolated operator. But if the human's next step is to share output with a colleague, annotate it, discuss it, and then redirect feedback to an agent, the platform needs to support that entire flow. This is why at FairMind we've released human-to-human collaboration capabilities directly within our platform, so that review, validation, and decision-making don't have to escape to email, Slack, or hallway conversations where context gets lost.

2. Explicit handoff protocols at every junction point

Every time a deliverable crosses from one strand to the other, the system should capture the right metadata. On the way to the humans, that means the agent's reasoning, the constraints it applied, and its confidence level. On the way back, it means what the humans decided and why. In FairMind, our agents pause at key decision points and explicitly request human feedback before proceeding. That feedback can arrive minutes or hours later. The agent waits, preserving its full reasoning state, and resumes with the enriched input. Beyond these checkpoints, humans can also co-edit the artifacts our agents produce: epics, user stories, tasks, architectural solutions, and more.
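
The pause-and-resume pattern can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a description of how FairMind implements it: `Checkpoint` and `CheckpointStore` are hypothetical names, the reasoning state is assumed to be JSON-serializable, and an in-memory dictionary stands in for durable storage.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Checkpoint:
    """Hypothetical snapshot taken when an agent stops to ask for feedback."""
    task_id: str
    question: str                                        # what the agent needs from a human
    reasoning_state: dict = field(default_factory=dict)  # assumed JSON-serializable
    human_feedback: Optional[str] = None


class CheckpointStore:
    """In-memory stand-in for durable storage (an assumption of this sketch)."""

    def __init__(self) -> None:
        self._saved: dict[str, str] = {}

    def pause(self, cp: Checkpoint) -> None:
        # Serialize the full state so the wait can last minutes or hours.
        self._saved[cp.task_id] = json.dumps(asdict(cp))

    def resume(self, task_id: str, feedback: str) -> Checkpoint:
        # Restore the saved state and attach the human's answer to it.
        cp = Checkpoint(**json.loads(self._saved.pop(task_id)))
        cp.human_feedback = feedback
        return cp


# Illustrative values only.
store = CheckpointStore()
store.pause(Checkpoint(
    task_id="design-42",
    question="Is the planned database migration in scope?",
    reasoning_state={"step": 3, "draft": "v1"},
))
resumed = store.resume("design-42", "Yes, target the new cluster.")
print(resumed.reasoning_state["step"], resumed.human_feedback)
```

Serializing the state at the pause, rather than keeping the agent process alive, is the design choice that lets feedback arrive much later without losing the reasoning that led to the question.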

3. Full structure visibility

At any point, every participant should see where they sit in the double helix. Today on FairMind, users can inspect the full reasoning chain of every agent and intervene directly on agent memory. Our new collaboration features enable multiple humans to collaboratively shape the memory and context of a single agent, so that the collective intelligence of the human strand feeds directly into the agent strand.
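
One way to picture several humans shaping a single agent's memory is an append-only log with attribution. The Python sketch below is hypothetical: `SharedAgentMemory`, `MemoryEntry`, and the sample contributors are assumptions made for illustration, and a later write simply overrides an earlier one for the same key.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class MemoryEntry:
    key: str
    value: str
    author: str   # the human who contributed this piece of context
    at: datetime  # when it was written (UTC)


class SharedAgentMemory:
    """Hypothetical append-only memory that several humans can write to."""

    def __init__(self) -> None:
        self._log: list[MemoryEntry] = []

    def write(self, key: str, value: str, author: str) -> None:
        self._log.append(MemoryEntry(key, value, author, datetime.now(timezone.utc)))

    def current(self) -> dict[str, str]:
        """Latest value per key; later writes override earlier ones."""
        return {entry.key: entry.value for entry in self._log}

    def history(self, key: str) -> list[MemoryEntry]:
        """Every write for a key, oldest first, so reviewers can see who changed what."""
        return [entry for entry in self._log if entry.key == key]


# Illustrative values only.
memory = SharedAgentMemory()
memory.write("deploy_target", "on-prem", author="developer")
memory.write("deploy_target", "cloud, after the migration", author="tech lead")
memory.write("team_capacity", "two engineers this sprint", author="tech lead")

print(memory.current()["deploy_target"])
print([entry.author for entry in memory.history("deploy_target")])
```

Because nothing is overwritten in the log, the agent reads the latest agreed context while humans can still trace how that context evolved and who contributed it.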

Looking ahead

The companies that will get the most value from AI agents won't be the ones with the smartest individual agents or the smoothest chat interfaces. They'll be the ones that design their workflows around the double helix: two collaboration strands running in parallel, connected by well-designed junction points, producing outcomes that no single layer could achieve alone.

At FairMind, this is not just a conceptual framework. It's our product roadmap. We are committed to making the handoffs from human to agent, from agent to human, and from agent to agent progressively more explicit, traceable, and governable. And we are investing heavily in making human-to-human collaboration on agent-generated artifacts as seamless as possible, because that's the strand the rest of the industry is neglecting.
